By optimizing Ghost's Redis caching, CarExplore slashed database query times from 600ms to 150ms and improved page loads by 70%. Now every Magic Pages site benefits.

When CarExplore.com.au's database queries started taking 600ms, I knew something was wrong. This automotive site with 1,600+ articles was slowing down, and traditional solutions weren't going to cut it.

Here's how I turned those 600ms queries into 150ms responses using Ghost's barely documented Redis caching, and why every Magic Pages customer now benefits from this optimization.

The Growing Pains of Success

CarExplore represents the perfect test case for Ghost performance optimisation. They have built a comprehensive resource covering everything from electric vehicle reviews to classic car restoration guides. Their content structure did not push Ghost to its limits, but it certainly showed some challenges:

- 1,600+ articles spanning news, reviews, and buyer's guides

- Complex tagging system: manufacturer, model, price range, fuel type, body style

- 8 contributing authors with their own article collections

- Rich content relationships that readers navigate daily

What slowed Ghost's queries down was the tagging system combined with the way their theme was built: it sends many queries to assemble each page.

Dylan and his team have done everything right: quality content, consistent publishing, smart categorization. But all of that success meant the data kept growing. And more data means slower queries.

At one point or another, most successful Ghost blogs will face this. However, this was the first time a site on Magic Pages was affected this badly. So, time for optimisations!

The Challenge

The symptoms were clear in the logs:

```text
WARN {{#get}} helper took 556ms to complete
WARN {{#get}} helper took 633ms to complete
WARN {{#get}} helper took 589ms to complete
```

These warnings appeared constantly, indicating that CarExplore's homepage was making multiple database queries every time someone visited. Each {{#get}} helper in their theme – fetching latest articles, featured reviews, trending content – was taking 500-600ms.
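To see how often these warnings occur and how slow they are, the log output can be summarised with a few lines of awk. This is a minimal sketch that assumes the warning text looks exactly like the lines above; the function name get_helper_stats is hypothetical.

```shell
#!/bin/sh
# Summarise slow {{#get}} warnings from Ghost's log output.
# Assumes lines like: WARN {{#get}} helper took 556ms to complete
get_helper_stats() {
  awk '
    match($0, /helper took [0-9]+ms/) {
      # Skip the 12 chars of "helper took " and drop the trailing "ms"
      ms = substr($0, RSTART + 12, RLENGTH - 14) + 0
      n++
      sum += ms
      if (ms > max) max = ms
    }
    END {
      if (n == 0) { print "no slow {{#get}} warnings"; exit }
      printf "count=%d avg=%.0fms max=%dms\n", n, sum / n, max
    }
  '
}

# Usage with the Docker Compose stack from this post:
# docker compose logs ghost | get_helper_stats
```

Fed the three warnings above, it reports a count of 3, an average of 593ms and a maximum of 633ms, which makes before/after comparisons easy to log.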

Magic Pages includes a CDN for every Ghost site on the Pro plan – BunnyCDN serves cached content from edge locations worldwide. But even the best CDN has its limitations.

When Dylan and his team publish a new article or update existing content, Ghost invalidates the CDN cache to ensure readers see fresh content. That is intentional, since you don't want readers to see old, outdated content. But after that invalidation, someone has to be the first visitor to hit the now-empty cache.

Additionally, any member-only content, previews, and admin pages bypass the CDN entirely.

When any of these scenarios occurs, the request goes straight to the origin server, and that's when those 600ms queries stack up. A page with a couple of {{#get}} queries adds roughly half a second per query before the server even starts sending HTML back to the visitor.

For a high-traffic site like CarExplore, this happens a few times per day. Each slow origin response is a reader who might not wait around, a potential customer who bounces, or a search crawler that downgrades the site's speed score.

Why Traditional Solutions Fall Short

CarExplore's database was already well-resourced – fast NVMe SSDs, plenty of RAM. The problem wasn't hardware; it was query complexity. When you ask Ghost to find posts tagged "Electric Vehicles" AND "Under $50k" AND authored by "Dylan" with related metadata, that's a complex operation across multiple database tables.

You could try other approaches, such as database indexing, but Ghost already creates appropriate indexes. What about query optimisation? Ghost already handles that under the hood, building its queries with the Bookshelf ORM (Object Relational Mapping) on top of the Knex query builder.

So, we need to cache. But where?

CDN caching helps with static content, but dynamic queries still hit the database. Application-level caching sits between Ghost and MySQL, storing query results in memory for instant retrieval.

And this is where things get interesting. I remembered that Ghost indeed has some internal caching, but it is deactivated by default – and not very well documented.

So, I started by looking through Ghost's configuration documentation.

There, I found a brief mention of cache adapters, but no real-life examples. I now knew that Ghost has a built-in Redis cache adapter and roughly how it should work. But... it felt incomplete.

I then started reading through Ghost's codebase and found some interesting details: Ghost indeed has a complete Redis caching system built in, with multiple cache adapters for different types of content:

- postsPublic: for caching post queries

- tagsPublic: for tag information

- imageSizes: for calculated image dimensions

- linkRedirectsPublic: for redirect lookups

- And more...

But here's what the documentation doesn't tell you: simply enabling Redis isn't enough. I learned this the hard way.

My first attempt was straightforward - spin up a Redis instance in the Kubernetes cluster and point Ghost to it. The results were disappointing. Query times improved by maybe 10-15%. That's when I realized: latency was killing the benefit.

Even though Redis was in the same cluster, the Redis database could be on any of the Kubernetes nodes, meaning that the network round-trip added 5-10ms per query. When you're trying to turn 600ms queries into sub-200ms responses, every millisecond counts. With CarExplore making multiple queries per page, that's 50-100ms of network overhead alone.

So, I tried a different approach: rather than having one central Redis database for ALL Magic Pages Ghost sites, how about giving every Ghost site its own Redis instance, as a classic sidecar container in the same Kubernetes pod? Containers in a pod share one network namespace, so Ghost reaches Redis over localhost with no network hop between nodes.
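A minimal sketch of the sidecar idea as a plain Kubernetes Pod manifest, written to a file by a small shell script. This is not Magic Pages' actual manifest: the pod name, image tags and memory limits are hypothetical, and Ghost's database settings are omitted for brevity. The key point is that Ghost's Redis host is the loopback address, because both containers share the pod's network namespace.

```shell
#!/bin/sh
# Hypothetical manifest illustrating a Redis sidecar next to Ghost.
# Names, tags and limits are assumptions; database env vars are omitted.
cat > ghost-redis-sidecar.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: ghost-example
spec:
  containers:
    - name: ghost
      image: ghost:5-alpine
      ports:
        - containerPort: 2368
      env:
        # Containers in a pod share one network namespace, so Redis is on loopback.
        - name: adapters__cache__Redis__host
          value: "127.0.0.1"
        - name: adapters__cache__Redis__port
          value: "6379"
        - name: adapters__cache__postsPublic__adapter
          value: "Redis"
        - name: hostSettings__postsPublicCache__enabled
          value: "true"
    - name: redis
      image: redis:7-alpine
      # Cache only: evict least-recently-used keys and never write snapshots.
      args: ["--maxmemory", "64mb", "--maxmemory-policy", "allkeys-lru", "--save", ""]
      resources:
        limits:
          memory: 96Mi
EOF
echo "Wrote ghost-redis-sidecar.yaml; apply with: kubectl apply -f ghost-redis-sidecar.yaml"
```

The trade-off is one Redis process per site instead of one shared instance, in exchange for zero cross-node round trips.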

After testing various TTL and refresh-ahead configurations, I identified the optimal settings:

- 30-minute time to live (TTL) for post queries (fresh enough for a news site)

- 1-hour TTL for tags and authors (these change less frequently)

- Refresh-ahead factor of 0.7 (cache refreshes at 70% of TTL, preventing cold hits)
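To make the refresh-ahead numbers concrete, here is a small sketch that computes when a refresh would be triggered, assuming the semantics described above (refresh once an entry's age reaches factor × TTL). Ghost's Redis adapter may implement the check differently internally; the function names refresh_point and refresh_due are hypothetical.

```shell
#!/bin/sh
# Refresh-ahead as described above: an entry is refreshed in the background
# once its age reaches FACTOR x TTL, i.e. before it actually expires.
# This mirrors the article's description, not Ghost's internal code.

# Seconds after which a refresh is triggered: refresh_point TTL FACTOR
refresh_point() {
  awk -v ttl="$1" -v f="$2" 'BEGIN { printf "%.0f\n", ttl * f }'
}

# Exit status 0 if an entry aged AGE seconds is due: refresh_due AGE TTL FACTOR
refresh_due() {
  [ "$1" -ge "$(refresh_point "$2" "$3")" ]
}

echo "postsPublic: refresh after $(refresh_point 1800 0.7)s (TTL 1800s)"
echo "tagsPublic:  refresh after $(refresh_point 3600 0.8)s (TTL 3600s)"
echo "imageSizes:  refresh after $(refresh_point 86400 0.95)s (TTL 86400s)"
```

For post queries that means a refresh after 1260 seconds (21 minutes), leaving 9 minutes in which the fresh result can be fetched before the old entry expires.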

Implementation

With the optimal configuration identified, I deployed the Redis setup to CarExplore.

Here's the complete docker-compose.yml configuration (similar to Magic Pages' Kubernetes setup). Redis caching requires extensive configuration, but the results are worth it:

```yaml
version: '3.8'

services:
  ghost:
    image: ghost:5-alpine
    environment:
      # Basic Ghost configuration
      url: http://localhost:2375
      NODE_ENV: production

      # Database configuration
      database__client: mysql
      database__connection__host: db
      database__connection__user: ghost
      database__connection__password: ghostpass
      database__connection__database: ghost

      # =================================
      # Redis Cache Configuration
      # =================================
      # Redis adapter configuration
      adapters__cache__Redis__host: redis
      adapters__cache__Redis__port: 6379
      adapters__cache__Redis__keyPrefix: "ghost:"
      adapters__cache__Redis__ttl: 3600
      adapters__cache__Redis__reuseConnection: "true"
      adapters__cache__Redis__refreshAheadFactor: 0.8
      adapters__cache__Redis__getTimeoutMilliseconds: 5000
      adapters__cache__Redis__storeConfig__retryConnectSeconds: 10
      adapters__cache__Redis__storeConfig__lazyConnect: "true"
      adapters__cache__Redis__storeConfig__enableOfflineQueue: "true"
      adapters__cache__Redis__storeConfig__maxRetriesPerRequest: 3

      # ===============================================
      # Cache Types
      # ===============================================
      # Image sizes cache
      adapters__cache__imageSizes__adapter: Redis
      adapters__cache__imageSizes__ttl: 86400 # 24 hours
      adapters__cache__imageSizes__refreshAheadFactor: 0.95

      # GScan cache
      adapters__cache__gscan__adapter: Redis
      adapters__cache__gscan__ttl: 43200 # 12 hours
      adapters__cache__gscan__refreshAheadFactor: 0.9

      # Posts public cache
      adapters__cache__postsPublic__adapter: Redis
      adapters__cache__postsPublic__ttl: 1800 # 30 minutes
      adapters__cache__postsPublic__refreshAheadFactor: 0.7

      # Tags public cache
      adapters__cache__tagsPublic__adapter: Redis
      adapters__cache__tagsPublic__ttl: 3600 # 1 hour
      adapters__cache__tagsPublic__refreshAheadFactor: 0.8

      # Link redirects public cache
      adapters__cache__linkRedirectsPublic__adapter: Redis
      adapters__cache__linkRedirectsPublic__ttl: 7200 # 2 hours
      adapters__cache__linkRedirectsPublic__refreshAheadFactor: 0.9

      # Stats cache
      adapters__cache__stats__adapter: Redis
      adapters__cache__stats__ttl: 900 # 15 minutes
      adapters__cache__stats__refreshAheadFactor: 0.8

      # ===============================================
      # Host Settings
      # ===============================================
      # Enable public cache features
      hostSettings__postsPublicCache__enabled: "true"
      hostSettings__linkRedirectsPublicCache__enabled: "true"
    volumes:
      - ghost-content:/var/lib/ghost/content
    ports:
      - "2375:2368"
    depends_on:
      db:
        condition: service_healthy
      redis:
        condition: service_healthy
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "wget", "--spider", "-q", "http://localhost:2368/ghost/api/admin/site/"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 60s

  redis:
    image: redis:7-alpine
    command: >
      redis-server
      --maxmemory 256mb
      --maxmemory-policy allkeys-lru
      --save ""
      --tcp-backlog 128
      --timeout 300
      --tcp-keepalive 60
      --databases 1
      --hz 10
      --loglevel warning
      --client-output-buffer-limit "normal 0 0 0"
      --stop-writes-on-bgsave-error no
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 5s
      timeout: 1s
      retries: 3
    restart: unless-stopped
    # Optional: enable Redis persistence if needed
    # volumes:
    #   - redis-data:/data

  db:
    image: mysql:8.0
    environment:
      MYSQL_ROOT_PASSWORD: root
      MYSQL_DATABASE: ghost
      MYSQL_USER: ghost
      MYSQL_PASSWORD: ghostpass
      MYSQL_CHARACTER_SET_SERVER: utf8mb4
      MYSQL_COLLATION_SERVER: utf8mb4_unicode_ci
    volumes:
      - db-data:/var/lib/mysql
    command: >
      --default-authentication-plugin=mysql_native_password
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_unicode_ci
      --innodb-buffer-pool-size=128M
      --max-connections=100
    healthcheck:
      test: ["CMD", "mysqladmin", "ping", "-h", "localhost", "-u", "root", "-proot"]
      interval: 5s
      timeout: 3s
      retries: 10
      start_period: 30s
    restart: unless-stopped

volumes:
  ghost-content:
  db-data:
  # redis-data:  # Uncomment if you want Redis persistence
```

This configuration includes six cache adapters covering all major Ghost query types. The "ghost:" keyPrefix makes Ghost's entries easy to spot when debugging, and the setup includes the necessary MySQL and Redis services.

Verifying Redis is Working

After starting the stack with docker compose up, you can verify Redis caching:

```shell
# Check if Redis is receiving queries
docker compose exec redis redis-cli info stats | grep keyspace

# Output shows cache activity:
# keyspace_hits:9     <- successful cache hits
# keyspace_misses:8   <- cache misses (queries that went to the database)
```

You can also view the cached queries directly:

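```shell
# View cached queries (KEYS blocks Redis while it runs; fine for a small cache)
docker compose exec redis redis-cli keys "*"

# On a busy instance, --scan iterates over the keys without blocking:
docker compose exec redis redis-cli --scan --pattern "ghost:*"

# You'll see entries like:
# ghost:postsPublic{"method":"browse","options":{"include":["authors","tags","tiers"],"limit":12,"page":1}}
# ghost:imageSizeshttps://static.ghost.org/v5.0.0/images/publication-cover.jpg
```

If you want to know the cache hit rate: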

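```shell
# Monitor cache hit rate (tr strips the carriage returns in INFO output;
# the END block avoids a division by zero on a fresh cache)
docker compose exec redis redis-cli info stats \
  | tr -d '\r' \
  | awk -F: '/^keyspace_hits:/ {h = $2} /^keyspace_misses:/ {m = $2}
      END { if (h + m > 0) printf "Hit rate: %.1f%%\n", 100 * h / (h + m); else print "No cache lookups yet" }'
```

The memory footprint is remarkably small: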

```shell
# Get memory usage
docker compose exec redis redis-cli info memory | grep used_memory_human
# used_memory_human:1.11M
```

Even on big sites running on Magic Pages, the memory barely goes above 10MB, so the impact on any given infrastructure is minimal.
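The key listing can also be grouped by cache type, to see which of the six configured caches are actually being filled. This sketch assumes the "ghost:<cacheName>..." key layout shown in the example keys above; the function name keys_per_cache is hypothetical.

```shell
#!/bin/sh
# Count cached keys per cache type, assuming keys look like
# "ghost:<cacheName>..." (keyPrefix "ghost:" followed by the cache name).
keys_per_cache() {
  awk '
    BEGIN {
      n = split("postsPublic tagsPublic imageSizes linkRedirectsPublic gscan stats", names, " ")
    }
    {
      for (i = 1; i <= n; i++) {
        if (index($0, "ghost:" names[i]) == 1) { count[names[i]]++; break }
      }
    }
    END {
      for (i = 1; i <= n; i++) printf "%s %d\n", names[i], count[names[i]]
    }
  '
}

# Usage: -T disables TTY allocation for piping; --scan avoids blocking Redis.
# docker compose exec -T redis redis-cli --scan --pattern "ghost:*" | keys_per_cache
```

A cache type that stays at zero after some traffic usually means its adapter setting did not take effect.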

The Results: From Red to Green

I deployed the Redis configuration to CarExplore on a Monday morning. By Monday afternoon, the first results were in.

Remember those warnings that started this journey?

```text
WARN {{#get}} helper took 556ms to complete
WARN {{#get}} helper took 633ms to complete
WARN {{#get}} helper took 589ms to complete
```

These warnings disappeared completely. Measured now, the {{#get}} helpers take between 120 and 150ms. That's a 75% improvement in query response time.

I expected moderate improvements, but Redis exceeded expectations by dramatically reducing lookup times.

Internal metrics were promising, but the real test came from Google's PageSpeed Insights. For a fair test, I bypassed the CDN and temporarily exposed an internal URL, so PageSpeed Insights could take measurements directly at the origin server.
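For a quick origin check without PageSpeed Insights, curl can report the time to first byte, which is where slow {{#get}} queries show up. A minimal sketch; the function name ttfb and the example URL are hypothetical, and the URL must point at the origin rather than the CDN for the numbers to mean anything.

```shell
#!/bin/sh
# Print time-to-first-byte (in seconds) for RUNS requests to URL.
ttfb() {
  url=$1
  runs=${2:-5}
  i=1
  while [ "$i" -le "$runs" ]; do
    # -s: silent, -o /dev/null: discard the body, -w: print timing afterwards
    curl -s -o /dev/null -w '%{time_starttransfer}\n' "$url"
    i=$((i + 1))
  done
}

# Usage (hypothetical internal origin URL):
# ttfb https://origin.example.internal/ 10
```

Running it a few times right after a cache purge and again once Redis is warm shows the difference between a cold and a cached origin response.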

Mobile PageSpeed Insights

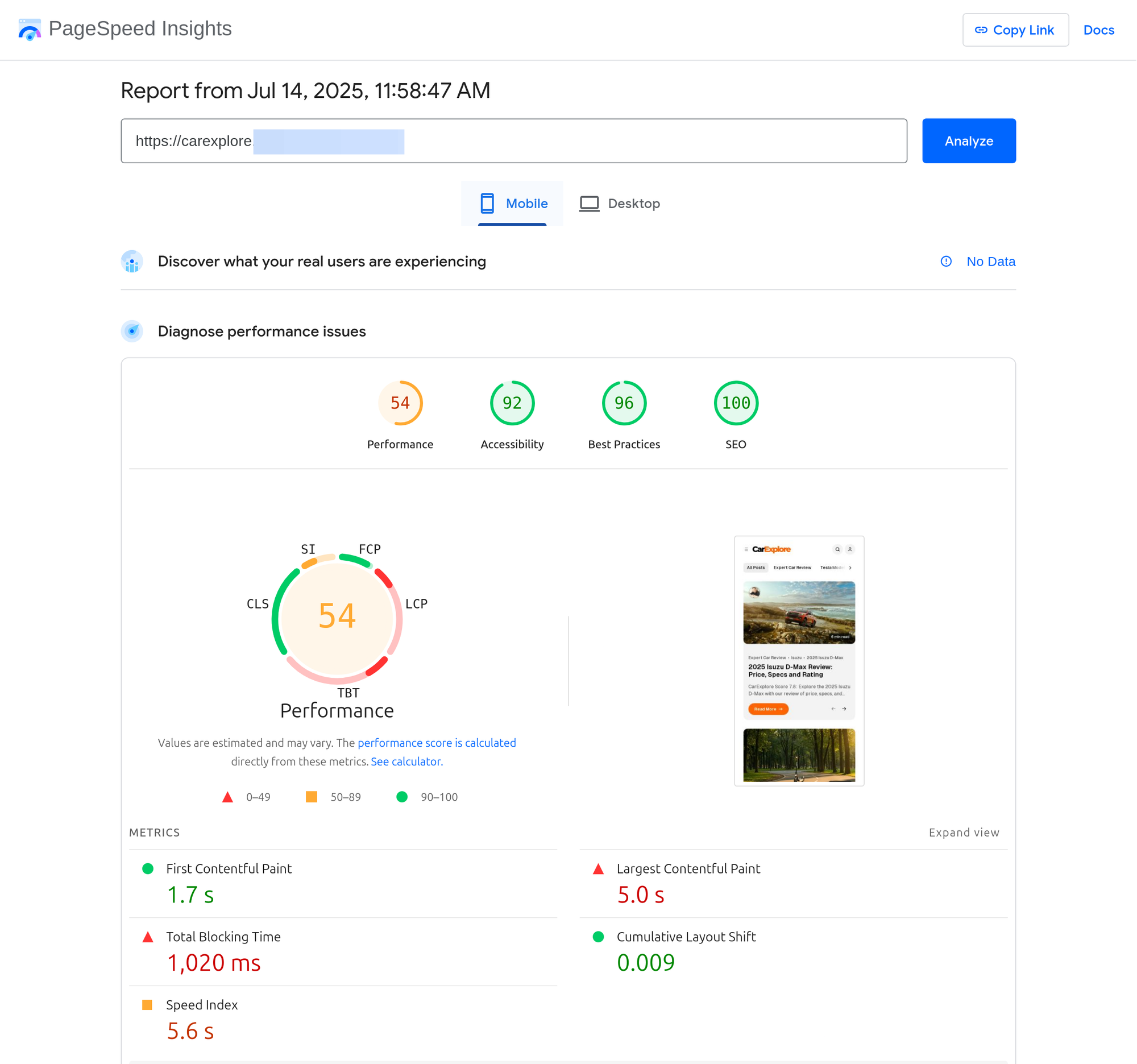

Before Redis was deployed, we hit 54 in the mobile PageSpeed Insights tests:

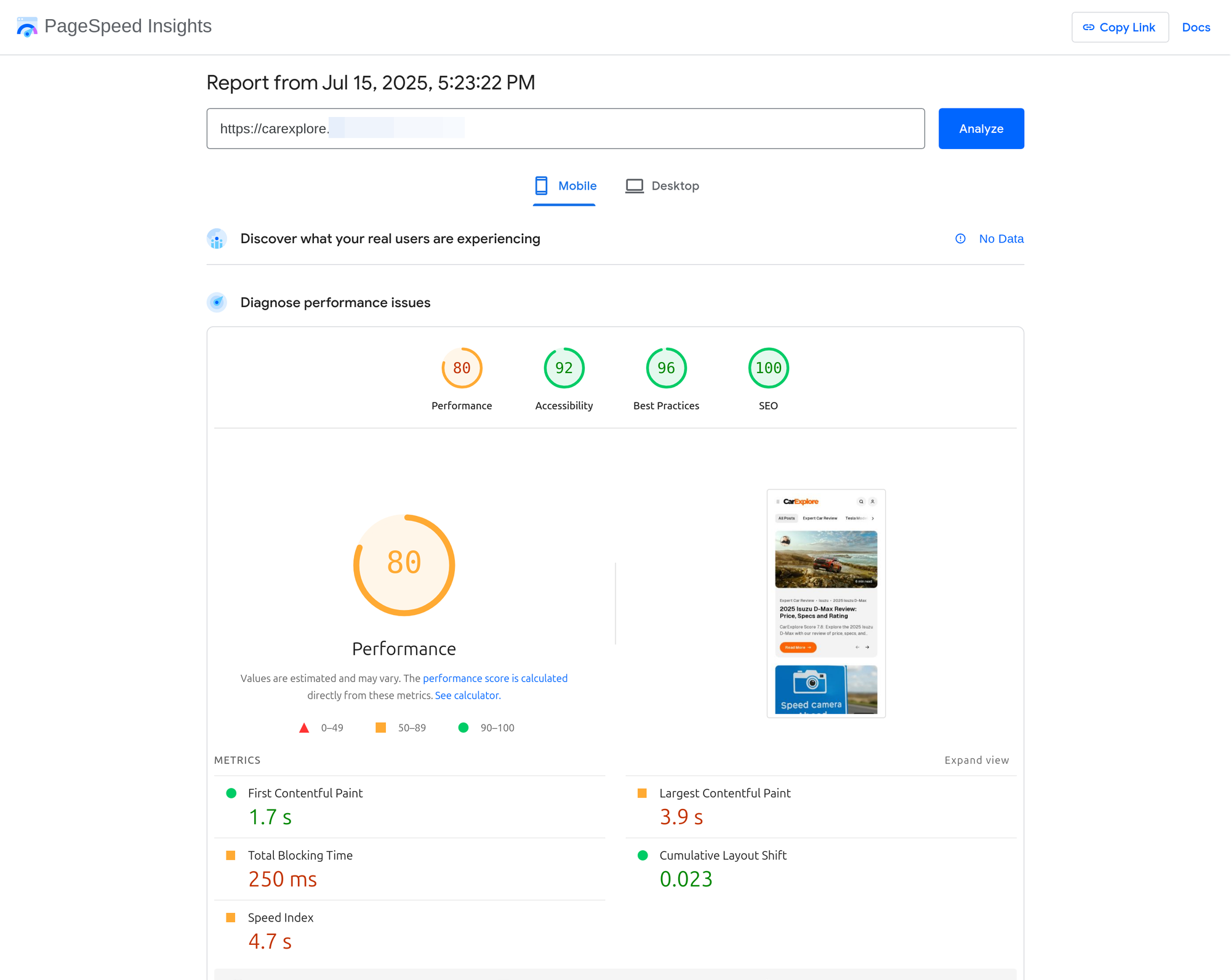

A day after Redis was deployed (and the cache had filled up nicely), we hit a score of 80:

That's a 48% improvement in mobile performance.

Desktop PageSpeed Insights

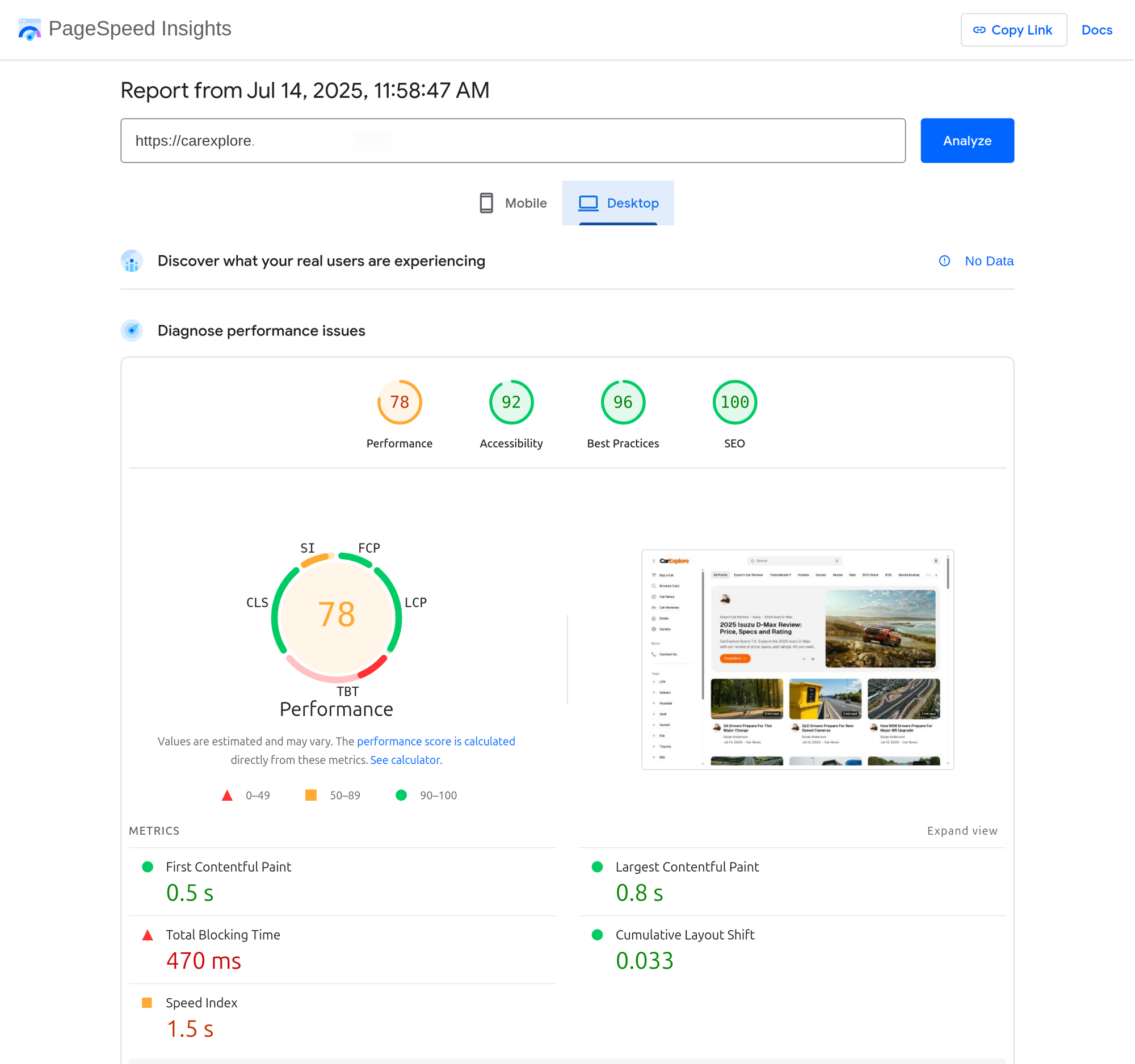

The desktop tests show the full picture. Before Redis was implemented, we hit a solid 78:

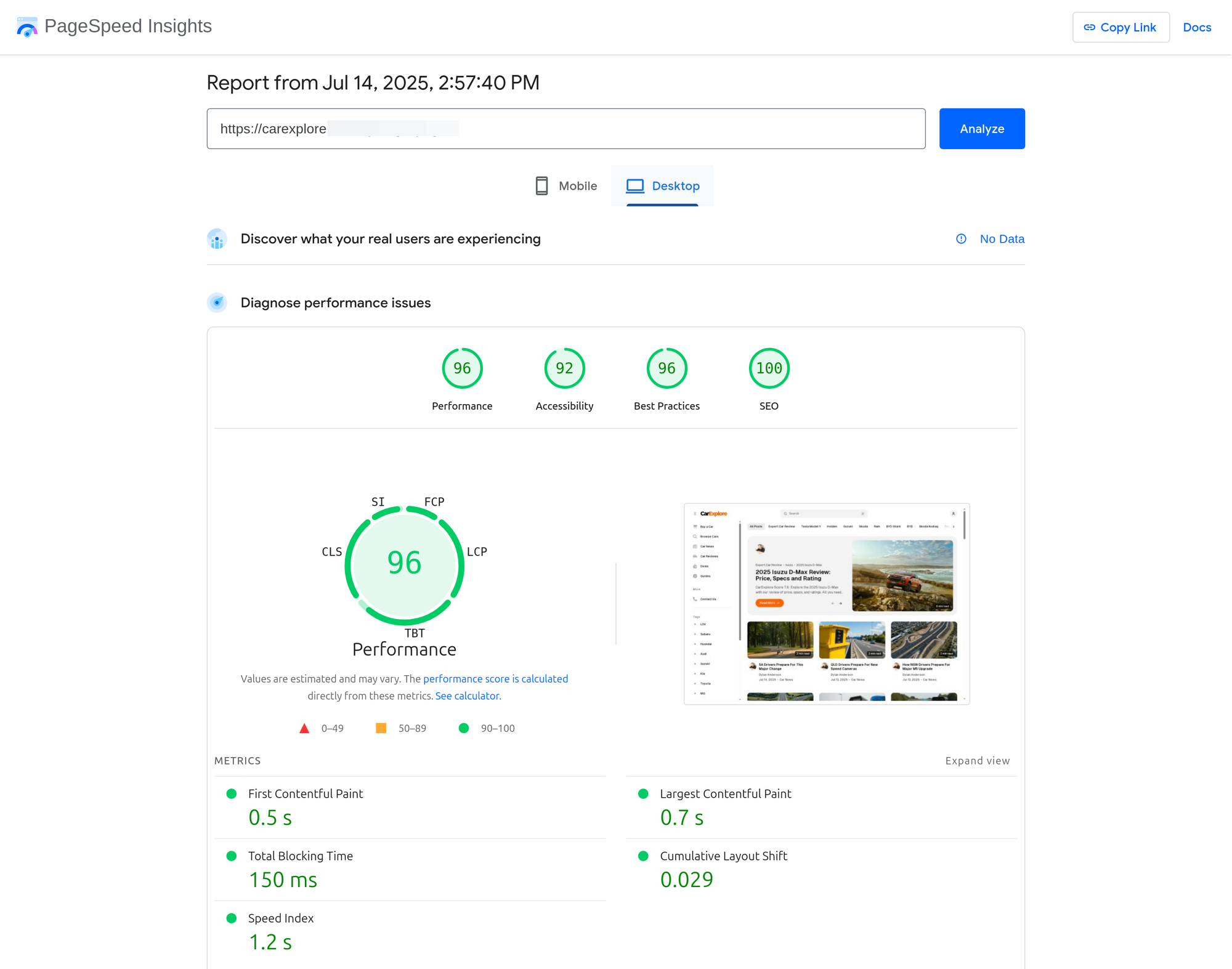

After our improvements, we then hit a 96:

That is less of a jump, but still an impressive 23% improvement.

Within a few hours, Redis was doing its job. The results at a glance:

- Database queries: 600ms → 150ms (75% faster)

- Mobile PageSpeed: 54 → 80 (48% improvement)

- Desktop PageSpeed: 78 → 96 (23% improvement)

- Cache hit rate: 72.3%

This means 7 out of 10 database queries never touched MySQL. They were served instantly from memory. In turn, this also means that the Magic Pages MySQL cluster has less work and can therefore serve the few requests that do come through even better.
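The percentages in the list above are plain relative changes, (after − before) / before × 100. A small sketch to reproduce them; the function name pct_change is hypothetical.

```shell
#!/bin/sh
# Relative change in percent between a "before" and an "after" value.
pct_change() {
  awk -v before="$1" -v after="$2" 'BEGIN { printf "%.1f\n", (after - before) / before * 100 }'
}

pct_change 600 150   # query time in ms: -75.0 (i.e. 75% faster)
pct_change 54 80     # mobile PageSpeed score: 48.1
pct_change 78 96     # desktop PageSpeed score: 23.1
```

Note the sign: query time is a cost, so a negative change is the improvement, while PageSpeed scores are better when the change is positive.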

What This Means for CarExplore

Dylan and his team now have a site that responds instantly, even during traffic spikes when new car reviews go live. Their content management workflow is smoother – no more waiting for admin pages to load when they're trying to publish time-sensitive automotive news.

Most importantly, they're future-proofed. As they keep adding car reviews and their tagging system grows more complex, performance won't degrade the way it did before.

Rolling Out to All Sites

These results proved the solution worked, but CarExplore was just the beginning. Their slow queries showed me what every growing Ghost site would eventually face.

The fix was clear: enable Redis caching for all Magic Pages sites. Not as an add-on or upgrade – just make it work for everyone.

The rollout was straightforward:

- Deploy Redis sidecars to all Ghost pods

- Enable the configuration

- Monitor performance metrics

No downtime. No configuration needed from customers. The sites just got faster.

All of this rolled out with today's update to Ghost v5.130.0. Since then, I've seen similar improvements across the board. Sites with 500+ posts see the biggest gains, but even smaller sites benefit from it.

The infrastructure cost? Minimal. Each Redis instance uses about 3-5MB of RAM. Currently, there are about 670 Ghost sites running on Magic Pages. Overall, the Redis setup uses 3.2GB of RAM. Negligible for a managed hosting setup.

The performance gain? Substantial. The load on the database cluster dropped significantly, leaving more headroom for growth, as a nice side effect.

For Those Running Ghost Elsewhere

If you're managing your own Ghost instance and seeing slow query warnings, I would suggest implementing Redis as well, especially if you're already running Ghost in a Docker setup.

The Docker Compose configuration in this post will get you started. Adjust the TTLs based on how often your content changes, and enjoy the faster site!

About Jannis Fedoruk-Betschki

I'm the founder of Magic Pages, providing managed Ghost hosting that makes it easy to focus on your content instead of technical details.